Introduction

As a website owner, developer, or webmaster, it's crucial to understand how your site performs in search engines. Google offers several tools for this, and one of the most powerful is Google Search Console. This free web service lets website owners monitor, maintain, and optimize their sites so they perform better in search results. One of its most valuable features is its crawl reports, which show how Google's web crawlers interact with your site and help you identify issues affecting your site's performance in search results.

Setting Up Google Search Console

To leverage the benefits of Google Search Console crawl reports, you need to start by setting up your website in the Search Console. Follow these steps:

- Access the Search Console: Visit the Google Search Console website and log in using your Google account.

- Add a Property: Click on the "Add a Property" button and enter your website's URL. Verify ownership by following the provided instructions.

- Submit a Sitemap: After verification, submit your website's sitemap to Google. This enables Google to understand your site's structure and content better.
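If your site does not already generate a sitemap, the sketch below shows roughly what one contains. It is a minimal illustration using Python's standard library; the `build_sitemap` helper and the example.com URLs are assumptions made for this example, and a real sitemap often carries extra fields such as last-modified dates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal sitemap XML string for a list of absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages on an example domain.
pages = ["https://www.example.com/", "https://www.example.com/about"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Save the output as sitemap.xml at the root of your site, then submit its URL in the Search Console.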


Understanding Google Search Console

What is Google Search Console?

Google Search Console, formerly known as Google Webmaster Tools, is a collection of tools and reports provided by Google to help website owners understand how their site is seen by the search engine. It offers valuable data and insights that aid in optimizing a website's performance on Google Search. By using the Search Console, webmasters can analyze search traffic, monitor website rankings, submit sitemaps, and identify and fix issues that may impact the site's visibility.

Why is it important for website owners?

For any website to succeed and attract organic traffic, it must be visible in search results. The Search Console helps website owners ensure that their site is being indexed by Google and is free from any crawl or indexing errors. It also provides valuable data on the website's organic search performance, allowing owners to make informed decisions to improve its visibility and user experience.

Understanding Google Search Console Crawl Reports

What are Crawl Reports?

Google Search Console crawl reports provide a detailed overview of how Google's web crawler, known as Googlebot, interacts with your website. Crawling is the process by which search engines navigate the web, visiting and analyzing web pages. The crawl reports show how often Googlebot accesses specific pages on your site, the response codes it receives, and any issues it encounters during crawling.

How do they work?

When Googlebot crawls a website, it analyzes the content and structure of each page. The data collected during this process is then used to update Google's index and rank pages in search results. Crawl Reports give website owners insights into which pages were crawled, how often they were crawled, and whether any errors were encountered during the process.

Monitoring Crawl Issues

Identifying crawl errors and issues

Google Search Console crawl reports can highlight various crawl errors that may prevent Googlebot from properly accessing certain pages on your website. The reports below cover the most common ones.

Crawl Error Reports

The crawl error report shows the pages on your website that Googlebot had trouble crawling. Keep an eye on this report to identify and fix issues that might prevent search engines from accessing and indexing your content correctly. Common crawl errors include "Page not found" (404 errors) and server errors (5xx errors), where Googlebot cannot get a valid response from the server hosting the website. By addressing these errors, you ensure that your site is effectively crawled and indexed. Other error types to watch for include:

- Soft 404 Errors: The page tells visitors that it doesn't exist but still returns a "200 OK" status code instead of a 404.

- 5xx Server Errors: These errors stem from server-side issues. Investigate your server logs, and work with your hosting provider to fix any server-related problems.

- Redirect Errors: Incorrect redirects can confuse Googlebot. Ensure your redirects are properly configured, and avoid redirect chains.

- Sitemap Errors: If your sitemap contains errors, Google may not crawl your site accurately. Use the Search Console's Sitemap Report to identify and fix issues.
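The error types above can be sketched in a few lines of Python. This is only an illustration: the `classify_response` and `redirect_chain` helpers, the error phrases, and the sample URLs are assumptions made for this example, and the soft 404 check is a rough heuristic rather than Google's actual logic.

```python
# Phrases that suggest an error page; an assumed heuristic, not Google's rule.
ERROR_PHRASES = ("page not found", "no longer available")

def classify_response(status, body=""):
    """Map an HTTP status code (and optional page text) to a crawl-error bucket."""
    if 500 <= status <= 599:
        return "server error"
    if status == 404:
        return "not found"
    if 300 <= status <= 399:
        return "redirect"
    if status == 200:
        text = body.lower()
        if any(phrase in text for phrase in ERROR_PHRASES):
            return "possible soft 404"
        return "ok"
    return "other"

def redirect_chain(start, redirects, max_hops=10):
    """Follow `start` through a {source: target} redirect map and return every URL visited."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:
            break  # redirect loop: stop instead of following it again
    return chain

# Hypothetical redirect rules for an example site.
rules = {"/old": "/interim", "/interim": "/new"}
print(classify_response(200, "Sorry, page not found"))
print(redirect_chain("/old", rules))
```

A chain longer than two entries means a URL redirects more than once; pointing the first URL straight at the final destination removes the extra hop.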

Crawl Stats Reports

The Crawl Stats report provides data about Googlebot's activity on your site over the last 90 days. It includes the number of pages crawled per day, the kilobytes downloaded, and the time spent downloading a page. Use this data to understand how frequently Googlebot visits your site and how many resources it consumes. Unusual spikes or drops in crawl activity may indicate issues with your website or server.

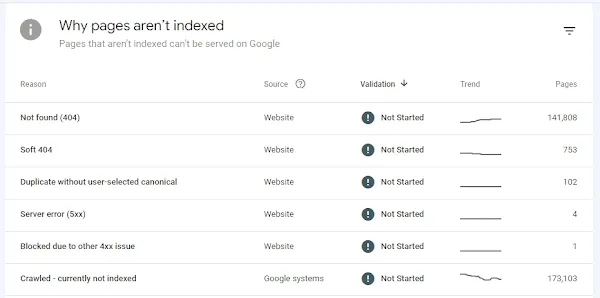
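One simple way to spot unusual spikes or drops is to compare each day's crawl count with the median for the period. The sketch below assumes you have copied the daily counts into a list; the `flag_unusual_days` helper, the 50% threshold, and the sample numbers are all illustrative assumptions.

```python
from statistics import median

def flag_unusual_days(daily_counts, threshold=0.5):
    """Return the indexes of days that differ from the median by more than `threshold` (as a fraction)."""
    typical = median(daily_counts)
    return [
        day
        for day, count in enumerate(daily_counts)
        if abs(count - typical) > threshold * typical
    ]

# Hypothetical pages-crawled-per-day values for one week.
crawled_per_day = [100, 110, 95, 105, 400, 98, 20]
print(flag_unusual_days(crawled_per_day))  # -> [4, 6]
```

Here day 4 is a spike and day 6 is a drop. The median is used rather than the mean so that a single extreme day does not shift the baseline.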

Coverage Report

The Coverage report lists the pages Google has crawled on your site and provides details about their indexing status. It groups pages by status: "Error," "Valid with warnings," "Valid," or "Excluded." Understanding this report can help you identify issues that might affect your site's visibility in search results. For example, you can check that important pages are marked as "Valid" and that no critical content is excluded or blocked from indexing.

Sitemap Report

In this report, you can submit and monitor sitemaps that help Googlebot discover and crawl your website's pages more efficiently. By submitting a sitemap, you can ensure that Google is aware of all the important pages on your site and is keeping them updated in its index.

Fetch as Google

This feature allows you to see how Googlebot renders a specific URL on your site. It helps you identify any rendering issues or content that might be inaccessible to the crawler. If Googlebot can't access certain elements or content on a page, it may not be indexed or displayed correctly in search results.

Robots.txt Tester

The robots.txt file tells search engine crawlers which parts of your site they should not crawl. It controls crawling rather than indexing, so a blocked URL can still appear in search results if other pages link to it. The Robots.txt Tester in Search Console helps you validate your robots.txt file and check for rules that might block Googlebot from crawling important pages.
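You can run a similar check locally with Python's built-in `urllib.robotparser` module. The sketch below is a rough approximation: the rules and paths are invented for the example, and Python applies the first matching rule, which can differ from how Googlebot resolves conflicting rules, so treat the result as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_crawlable(path, user_agent="Googlebot"):
    """Return True if the parsed rules allow `user_agent` to fetch `path`."""
    return parser.can_fetch(user_agent, path)

print(is_crawlable("/blog/crawl-reports"))  # allowed by "Allow: /"
print(is_crawlable("/admin/settings"))      # blocked by "Disallow: /admin/"
```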

How to interpret crawl data

Interpreting crawl data allows website owners to understand how their site is being perceived by Google. By analyzing crawl patterns and error reports, webmasters can identify potential issues affecting their website's performance in search results. Addressing these issues promptly can lead to improved crawlability and better rankings on Google Search.

Improving Website Crawlability

Best practices for optimizing crawlability

To enhance crawlability and ensure that Googlebot can efficiently navigate and index your website, consider the following best practices:

- XML Sitemap: Create and submit an XML sitemap to Google Search Console to help Googlebot discover and index your pages effectively.

- Optimize Internal Linking: Ensure a clear and logical internal linking structure to help Googlebot navigate through your site.

- User-Friendly URLs: Use descriptive and user-friendly URLs that reflect the content of each page.

- Mobile-Friendly Design: Ensure your website is mobile-friendly to cater to the growing number of mobile users.

Utilizing sitemaps and robots.txt

Sitemaps play a crucial role in helping search engines understand the structure of your website. They provide a roadmap for crawlers, indicating which pages are important and when they were last updated. The robots.txt file, on the other hand, tells search engine crawlers which pages or sections of your site should not be crawled.

Enhancing Website Performance

Understanding website performance metrics

In addition to crawl-related data, the Google Search Console also provides performance reports. These reports offer insights into how your website is performing in search results. Metrics such as impressions, clicks, click-through rates, and average position can give valuable information about your site's visibility and user engagement.
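Click-through rate is simply clicks divided by impressions. As a rough sketch, assuming you have exported per-page rows from the performance report, the hypothetical helpers below compute it and list pages that are shown often but rarely clicked. The field names, thresholds, and sample rows are assumptions made for illustration.

```python
def click_through_rate(clicks, impressions):
    """Return clicks / impressions, or 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0

def low_ctr_pages(rows, min_impressions=500, max_ctr=0.02):
    """Return pages with at least `min_impressions` but a CTR below `max_ctr`."""
    return [
        row["page"]
        for row in rows
        if row["impressions"] >= min_impressions
        and click_through_rate(row["clicks"], row["impressions"]) < max_ctr
    ]

# Hypothetical rows; real exports contain more columns.
rows = [
    {"page": "/pricing", "clicks": 30, "impressions": 1000},
    {"page": "/blog/crawl-budget", "clicks": 5, "impressions": 800},
    {"page": "/contact", "clicks": 1, "impressions": 40},
]
print(low_ctr_pages(rows))  # -> ['/blog/crawl-budget']
```

Pages on this list are good candidates for a better title or description, since searchers already see them.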

Using Google Search Console Crawl Reports to improve performance

Crawl Reports and performance data work hand in hand to identify opportunities for website improvement. By comparing crawl data with performance metrics, webmasters can identify which pages need optimization to attract more organic traffic and improve overall user experience.

Utilizing Crawl Stats for Optimization and Indexing

Analyzing crawl stats for insights

Crawl Stats in Google Search Console give detailed information about Googlebot's activity on your site, including pages crawled per day, kilobytes downloaded, and time spent downloading a page. Analyzing these stats shows how efficiently Googlebot crawls your site and whether there are any crawl issues.

- Crawl Requests: Analyze the number of pages requested per day to understand Googlebot's interest in your content. A higher crawl rate can indicate a strong site presence.

- Response Codes: Review the response codes to identify potential issues. A surge in 5xx codes might indicate server problems, while an increase in 429 codes means your server is telling Googlebot that it is sending too many requests and should slow down.

- File Types Crawled: Learn which file types Googlebot crawls most frequently. This insight can help you focus on creating more of the preferred content types.

- Crawl Time: Observe the average time spent downloading a page. Slow crawl times can affect indexing and rankings, so optimize your site for faster loading.
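To make the response-code review concrete, the sketch below groups a list of status codes into classes and reports each class's share. It assumes you already have the codes from server logs or an export; the `status_class_shares` helper and the sample data are hypothetical.

```python
from collections import Counter

def status_class_shares(status_codes):
    """Return the fraction of responses in each class, e.g. {'2xx': 0.9, ...}."""
    if not status_codes:
        return {}
    counts = Counter(f"{code // 100}xx" for code in status_codes)
    total = len(status_codes)
    return {status_class: n / total for status_class, n in counts.items()}

# Hypothetical sample: 100 responses served to Googlebot.
codes = [200] * 90 + [404] * 5 + [503] * 3 + [429] * 2
shares = status_class_shares(codes)
rate_limited = codes.count(429)

print(shares)
print(f"429 responses: {rate_limited}")
```

A rising 5xx share, or any sustained number of 429 responses, is worth raising with your hosting provider.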

Monitoring website indexing status

In addition to crawl stats, website owners can use Google Search Console to monitor their website's indexing status. This information shows which pages have been indexed and which ones might be facing indexing problems.

Tracking Security Issues

Detecting and resolving security concerns

The Search Console also helps in monitoring website security. It can detect potential security issues, such as malware or hacked content on your site, and notify you promptly. Addressing these concerns ensures that your website remains safe and trustworthy for visitors.

Ensuring website safety and trustworthiness

A secure website is critical for building trust with users and search engines alike. By using the Search Console to stay vigilant about security issues, website owners can maintain a positive reputation and safeguard their online presence.

Understanding URL Inspection

What is URL Inspection?

URL Inspection is a feature in the Google Search Console that allows website owners to check how Google sees a specific URL on their site. By entering a URL, webmasters can view the index status, any crawl errors, and the rendered version of the page as seen by Google.

How to use it effectively

Webmasters can use URL Inspection to diagnose issues with individual pages and understand how Googlebot interacts with them. By regularly inspecting important URLs, website owners can ensure that critical pages are being properly crawled and indexed.

URL Inspection for Individual Pages

The URL Inspection tool within the Search Console lets you examine how Google sees a specific page on your website. For an individual page, it reports the following:

- Page Indexing Status: Check if the page is indexed by Google. If not, you can request indexing to ensure your content appears in search results.

- Coverage and Errors: The URL Inspection report highlights any indexing issues and provides details on why a page might not be indexed.

- Canonicalization: Confirm that your canonical URLs are set correctly to prevent duplicate content issues.

- Crawl and Index Date: Find out when Google last crawled and indexed the page. Frequent updates indicate a dynamic site with fresh content.
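Before inspecting a URL, it can help to confirm which canonical URL the page itself declares. The sketch below reads the `rel="canonical"` link from a page's HTML using Python's standard `html.parser` module. The `find_canonical` helper and the sample markup are assumptions for illustration, and the canonical Google finally selects may differ from the one you declare.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")

def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# Hypothetical page markup.
page = '<html><head><link rel="canonical" href="https://www.example.com/shoes"></head></html>'
print(find_canonical(page))  # -> https://www.example.com/shoes
```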

Staying Informed with Crawl Notifications

Receiving notifications about crawl issues

The Search Console allows website owners to receive email notifications when significant crawl issues are detected. By promptly addressing these notifications, webmasters can prevent potential negative impacts on their website's performance and visibility.

Taking timely actions to resolve them

Being informed about crawl issues empowers website owners to take timely actions to resolve them. This proactive approach ensures that potential problems are addressed promptly, contributing to a smoother and more efficient website performance.

Monitoring Mobile Usability

Mobile-friendliness and its impact on rankings

With an increasing number of users accessing websites on mobile devices, mobile-friendliness has become a crucial factor for SEO. The Google Search Console provides a Mobile Usability report that highlights mobile-related issues that could affect a website's rankings on mobile search results.

Using Crawl Reports for mobile optimization

By analyzing crawl data specific to mobile devices, webmasters can identify and address mobile usability issues. This optimization enhances the user experience for mobile users and positively impacts search rankings.

Leveraging Performance Reports

Analyzing website traffic and user behavior

Performance reports in the Search Console offer a comprehensive view of website traffic from Google Search. They provide data on the search queries that led users to your site, the pages that attract the most traffic, and engagement metrics such as clicks and click-through rate.

Making data-driven decisions for growth

By leveraging performance data, website owners can make data-driven decisions to enhance their online presence. Understanding user behavior and preferences can lead to targeted improvements that resonate with the target audience.

Troubleshooting Common Crawl Problems

How to address common crawl-related issues

Several common crawl issues can hinder a website's performance in search results. These include duplicate content, thin or low-quality content, and server misconfigurations. By identifying and resolving these issues, webmasters can improve their site's crawlability and overall SEO.
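As a rough first pass at finding duplicate and thin pages, you can compare normalized page text and count words. The sketch below assumes you have already extracted the visible text of each page; the `find_content_issues` helper, the 50-word threshold, and the sample pages are illustrative assumptions, and exact-match hashing will not catch near-duplicates.

```python
import hashlib
from collections import defaultdict

def fingerprint(text):
    """Hash the text after lowercasing it and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_content_issues(pages, min_words=50):
    """Return (groups of URLs with identical text, URLs with fewer than `min_words` words)."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    duplicates = [sorted(urls) for urls in groups.values() if len(urls) > 1]
    thin = sorted(url for url, text in pages.items() if len(text.split()) < min_words)
    return duplicates, thin

# Hypothetical extracted page text keyed by URL.
site_pages = {
    "/a": "Hello   World",
    "/b": "hello world",
    "/guide": "word " * 60,
}
duplicates, thin = find_content_issues(site_pages)
print(duplicates)  # -> [['/a', '/b']]
print(thin)        # -> ['/a', '/b']
```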

Avoiding potential pitfalls

While optimizing for crawlability is essential, it's also crucial to avoid aggressive SEO practices that violate Google's guidelines. Engaging in such practices can lead to penalties, causing your site's rankings to plummet.

Conclusion

In conclusion, Google Search Console Crawl reports are indispensable tools for website owners looking to optimize their site's visibility in search results. These reports offer valuable insights into how Google's web crawlers interact with your website, enabling you to identify and address potential crawl issues. By proactively optimizing your site's crawlability and addressing any errors or security concerns, you can ensure that your website performs at its best and attracts organic traffic from search engines.

FAQs

What is Google Search Console?

Google Search Console is a free web service provided by Google that helps website owners monitor and optimize their site's presence in Google Search.

How can Crawl Reports help improve website performance?

Crawl Reports provide data on how Google's web crawlers interact with your site, allowing you to identify and resolve crawl issues that may impact your site's performance in search results.

What are some common crawl-related issues to watch out for?

Common crawl issues include server errors, 404 errors, soft 404 errors, and blocked URLs. Addressing these issues can improve your website's crawlability.

How does URL Inspection work?

URL Inspection in the Search Console allows website owners to check how Google sees a specific URL on their site, including index status and crawl errors.

Why is mobile-friendliness important for SEO?

With the increasing use of mobile devices for browsing, Google prioritizes mobile-friendly websites in mobile search results. Optimizing for mobile usability can improve your site's rankings.